Publications

Multi-Column Layout User Guide

This guide explains how to use the multi-column layout feature on your website.

Basic Syntax

Use the multi-col identifier to create a multi-column layout:

``````multi-col

```col

Column 1 content

```

```col

Column 2 content

```

``````

Special Column Styles

Bordered Column (col-border)

Adds a border to the specified column:

``````multi-col

```col-border

**Bordered Column**

This column will have a nice border effect

```

```col

Normal column content

```

``````

Shaded Column (col-shade)

Adds a shadow effect to the specified column:

``````multi-col

```col

Normal column content

```

```col-shade

**Shaded Column**

This column will have a shadow and hover effect

```

``````

Syntax Hierarchy Rules

Choose the number of backticks for the outer multi-col block based on content complexity:

| Content Type | Outer Block Backticks | Column Identifiers |
|---|---|---|
| Simple text | 6 | col, col-border, col-shade |
| Contains code blocks | 9 | Same as above |
| Complex nesting | 12 | Same as above |
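The tiers in this table are consistent with how fenced blocks close in CommonMark: a closing fence must be at least as long as the opening fence, so the outer block needs more backticks than any fence nested inside it. The sketch below picks the smallest tier from the table that is safe for a given block body. `outer_fence_length` is a hypothetical helper for illustration, not part of the site's code, and it only approximates CommonMark's indentation rules.

```python
import re

# Backtick counts for the outer multi-col fence, taken from the table above.
TIERS = (6, 9, 12)

# A run of 3+ backticks at the start of a line (after optional indentation) can
# open or close a fence; this approximates CommonMark's up-to-3-space rule.
FENCE_RUN = re.compile(r"^[ \t]*(`{3,})", re.MULTILINE)


def outer_fence_length(inner: str) -> int:
    """Return the smallest tier longer than every fence inside the block body."""
    longest = max((len(m.group(1)) for m in FENCE_RUN.finditer(inner)), default=0)
    for tier in TIERS:
        # A closing fence must be at least as long as the opening fence, so the
        # outer fence must be strictly longer than any inner one.
        if tier > longest:
            return tier
    raise ValueError(f"an inner fence of {longest} backticks needs an outer fence longer than 12")


simple = "```col\nColumn 1 content\n```\n\n```col\nColumn 2 content\n```\n"
with_code = "``````col\n```python\nprint('hi')\n```\n``````\n"
print(outer_fence_length(simple))     # 6
print(outer_fence_length(with_code))  # 9
```

In the second case the columns themselves use 6-backtick fences so that the inner 3-backtick code block cannot close them early, which pushes the outer block to the 9-backtick tier.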

Examples

Basic Two-Column Layout

Left Column

- Item 1

- Item 2

- Item 3

Right Column

- Step 1

- Step 2

- Step 3

Multi-Column with Code Blocks

Python Code Example

```python
def example():
    print("Hello World!")
    return True
```

Configuration Example

```yaml
# Configuration file
settings:
  enabled: true
  mode: "production"
```

Three-Column Image Display

Fairy

Japanese Goblin

Mage

Border and Shade Demonstration

📋 Important Note

This is an important information box with a border.

- Important info 1

- Important info 2

- Important info 3

The border color automatically adapts to the theme

🎨 Design Highlight

This is a design showcase area with a shadow.

It animates on mouse hover:

- Deeper shadow

- Slightly raised

Perfect for highlighting important content!

📝 Normal Content

This is normal column content with no special styling.

It serves as a baseline for comparison, highlighting:

- The distinction of the bordered column

- The depth of the shaded column

- The simplicity of the normal column

Features

- ✅ Auto-detects column count (2-8 columns)

- ✅ Responsive design (stacks to a single column on mobile)

- ✅ Full syntax highlighting support

- ✅ Supports any Markdown content

- ✅ Flexible backtick hierarchy system

- ✅ Bordered column (col-border)

- ✅ Shaded column (col-shade)
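How the site detects columns is not documented here. As one possible approach, the sketch below splits the body of a multi-col block into columns, assuming each column is a fenced block whose info string is col, col-border, or col-shade. `parse_columns` and its regex are illustrative, not the site's implementation, and the 2 to 8 column limit is left out.

```python
import re

# One inner column: an opening fence of 3+ backticks plus a column identifier,
# then the body, then a closing line with exactly the same fence.
COLUMN = re.compile(
    r"^(?P<fence>`{3,})(?P<kind>col(?:-border|-shade)?)[ \t]*\n"
    r"(?P<body>.*?)"
    r"^(?P=fence)[ \t]*$",
    re.MULTILINE | re.DOTALL,
)


def parse_columns(inner: str) -> list[tuple[str, str]]:
    """Return (style, content) pairs, one per column, in document order."""
    return [(m.group("kind"), m.group("body").strip()) for m in COLUMN.finditer(inner)]


block = "```col-border\n**Bordered Column**\n```\n\n```col\nNormal column content\n```\n"
columns = parse_columns(block)
print(len(columns))  # 2
print(columns[0])    # ('col-border', '**Bordered Column**')
```

Because the closing line must repeat the opening fence exactly, a 6-backtick column can safely contain a 3-backtick code block; the inner fence is kept as part of the column content.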

Best Practices

- Choose the Right Backtick Count: Select based on content complexity

- Keep Column Content Balanced: Try to keep the length of each column similar

- Mobile-Friendly: Consider the display on small screens

- Clear Semantics: Use meaningful titles for columns

Shortcode Alternative

If you prefer not to use the code block syntax, you can also use a shortcode:

Column 1

Robot

Column 2

Smiling Imp

Column 3

Star-Struck Face

This multi-column layout system provides a powerful and flexible way to organize content.

Image Positioning Guide

Image Control System Test

This document demonstrates the new image control system that allows you to specify image width and position using extended markdown syntax.

Syntax

The syntax is: [width, position]

- alt text: Alternative text for the image; it is also displayed as the caption. KaTeX math is not supported in the alt text.

- width: Percentage of maximum width (10-100), default is 100

- position: left, center, or right, default is center
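The parameter rules above (optional width from 10 to 100, optional position, and defaults of 100 and center) fit in a small parser. This is a hedged sketch in Python, whereas the Technical Details section states the site does this step with a JavaScript script. The name `parse_image_control` is hypothetical, and rejecting out-of-range widths is an assumption; the site may clamp or ignore them instead.

```python
import re

POSITIONS = {"left", "center", "right"}


def parse_image_control(spec: str) -> tuple[int, str]:
    """Parse '[width, position]', '[width]' or '[position]' into (width, position)."""
    match = re.fullmatch(r"\s*\[([^\]]*)\]\s*", spec)
    if match is None:
        raise ValueError(f"not a control block: {spec!r}")
    width, position = 100, "center"  # documented defaults
    parts = [p.strip() for p in match.group(1).split(",") if p.strip()]
    for part in parts:
        if part.isdigit():
            width = int(part)
            # Assumption: out-of-range widths are rejected rather than clamped.
            if not 10 <= width <= 100:
                raise ValueError(f"width must be between 10 and 100, got {width}")
        elif part in POSITIONS:
            position = part
        else:
            raise ValueError(f"unknown parameter: {part!r}")
    return width, position


for spec in ("[50, center]", "[30, left]", "[70]", "[left]"):
    print(spec, "->", parse_image_control(spec))
```

Each parameter is recognized by its form (digits versus a position keyword), which is what lets width-only and position-only blocks work without a fixed argument order.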

Examples

Default (100% width, center position)

This image uses default settings.

50% width, center position

[50, center]

This image is 50% width and centered.

30% width, left position

[30, left]

This image is 30% width and aligned to the left.

40% width, right position

[40, right]

This image is 40% width and aligned to the right.

Width only (default center)

[70]

This image is 70% width with default center alignment.

Position only (default 100% width)

[left]

This image uses default 100% width but is left-aligned.

Various width examples

25% width, center

[25, center]

60% width, center

[60, center]

80% width, center

[80, center]

Mixed alignments

Small image, left aligned

[25, left]

Medium image, right aligned

[50, right]

Large image, center aligned

[75, center]

Features

- Responsive: On mobile devices, all images become 100% width and center-aligned

- Hover effects: Images have subtle hover animations

- Consistent styling: All images maintain consistent border radius and shadows

- Flexible syntax: You can specify just width, just position, or both

- Default values: Sensible defaults when parameters are omitted

Technical Details

The system works by:

- JavaScript parsing: A script scans for image elements and looks for control syntax

- CSS classes: Applies appropriate width and position classes

- Container wrapping: Wraps images in containers for better control

- Responsive behavior: Uses CSS media queries for mobile optimization
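The CSS-class and container-wrapping steps amount to turning parsed parameters into markup. The sketch below models that step in Python rather than the site's JavaScript. The class names (img-container, img-w-30, img-left, and so on) and the use of figure/figcaption elements are hypothetical, chosen only to show how width and position become classes and how the alt text can double as the caption.

```python
from html import escape


def wrap_image(src: str, alt: str, width: int = 100, position: str = "center") -> str:
    """Wrap an image in a container whose classes encode width and position.

    The class names are hypothetical; a stylesheet would map e.g. img-w-50 to
    width: 50% and img-left to left alignment, with a media query resetting
    both on small screens.
    """
    classes = f"img-container img-w-{width} img-{position}"
    safe_alt = escape(alt)  # the alt text is reused as the visible caption
    return (
        f'<figure class="{classes}">'
        f'<img src="{escape(src)}" alt="{safe_alt}">'
        f"<figcaption>{safe_alt}</figcaption>"
        "</figure>"
    )


print(wrap_image("cat.png", "A cat", width=30, position="left"))
```

Keeping width and position as separate classes lets a single media query override both on mobile, matching the responsive behavior described above.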

Code Block Enhancement Feature Test

Author: Jane Doe

This page is for testing the new features of the code block:

- Dark/light mode adaptation

- Automatic text wrapping

- Copy button

Python Code - Long Line Test

```python
def very_long_function_name_to_test_text_wrapping_functionality(parameter_one, parameter_two, parameter_three, parameter_four, parameter_five):
    """
    This is a very long function name to test the text wrapping functionality of the code block.
    When the code line is too long, it should be able to wrap automatically instead of generating a horizontal scroll bar.
    """
    very_long_variable_name_that_should_wrap = "This is a very long string to test the wrapping behavior of the code block when encountering long text, and it should be able to correctly handle the mixed situation of Chinese and English"
    result = some_very_long_function_call_that_exceeds_normal_line_length(very_long_variable_name_that_should_wrap, parameter_one, parameter_two)
    return result

# Test various syntax highlighting
class DataProcessor:
    def __init__(self, data_source="default", max_iterations=1000, learning_rate=0.001):
        self.data_source = data_source  # Data source configuration
        self.max_iterations = max_iterations
        self.learning_rate = learning_rate

    def process_data_with_very_long_method_name_for_testing_purposes(self):
        for i in range(self.max_iterations):
            print(f"Processing iteration {i} with learning rate {self.learning_rate} on data from {self.data_source}")
```

JavaScript Code - Complex Example

```jsx
// This is a JavaScript example to test syntax highlighting in dark and light modes
import { useState, useEffect } from 'react';

const createComplexDataVisualizationComponentWithManyProps = ({
  dataSource,
  chartType = 'line',
  colorScheme = 'default',
  animationDuration = 300,
  responsiveBreakpoints = { mobile: 768, tablet: 1024, desktop: 1200 }
}) => {
  const [isLoading, setIsLoading] = useState(true);
  const [processedData, setProcessedData] = useState([]);
  const [errorMessage, setErrorMessage] = useState("This is a very long error message to test how the code block handles the display and wrapping of long text content");

  useEffect(() => {
    const fetchAndProcessDataFromRemoteApiWithRetryLogic = async () => {
      try {
        const response = await fetch(`https://api.example.com/very/long/endpoint/path/that/might/cause/horizontal/scrolling/issues?param1=value1&param2=value2&param3=value3`);
        const rawData = await response.json();
        // Complex logic for processing data
        const transformedData = rawData.map(item => ({
          ...item,
          displayName: `${item.firstName} ${item.lastName} - ${item.department} (${item.employee_id})`,
          fullAddress: `${item.address.street} ${item.address.number}, ${item.address.city}, ${item.address.country} ${item.address.postalCode}`
        }));
        setProcessedData(transformedData);
      } catch (error) {
        console.error('Data acquisition failed:', error.message);
        setErrorMessage(`An error occurred while fetching data: ${error.message} - Please check your network connection and try again`);
      } finally {
        setIsLoading(false);
      }
    };

    fetchAndProcessDataFromRemoteApiWithRetryLogic();
  }, [dataSource]);

  return (
    <div className="complex-visualization-container">
      {isLoading && <LoadingSpinner message="Loading data, please wait..." />}
      {errorMessage && <ErrorDisplay message={errorMessage} />}
      {processedData.length > 0 && <Chart data={processedData} config={chartType} />}
    </div>
  );
};
```

Bash Script - System Management

```bash
#!/bin/bash
# This is a complex system management script to test code highlighting and wrapping functions

SCRIPT_NAME="complex_system_management_script_with_very_long_name_for_testing_purposes.sh"
LOG_FILE="/var/log/system_management_$(date +%Y%m%d_%H%M%S)_detailed_log_with_timestamp.log"
CONFIG_FILE="/etc/system_config/application_settings_production_environment.conf"

function perform_system_backup_with_compression_and_remote_sync() {
    local backup_source_directory="$1"
    local backup_destination_with_timestamp="/backup/$(date +%Y%m%d_%H%M%S)_system_backup_compressed"
    local remote_backup_server="backup-server.company.com:/remote/backup/location/with/deep/directory/structure"

    echo "Start system backup operation - Source directory: $backup_source_directory"
    echo "Backup target: $backup_destination_with_timestamp"
    echo "Remote sync server: $remote_backup_server"

    # Create compressed backup
    tar -czf "${backup_destination_with_timestamp}.tar.gz" \
        --exclude="*.tmp" \
        --exclude="*.log" \
        --exclude="cache/*" \
        --exclude="temp/*" \
        "$backup_source_directory" 2>&1 | tee -a "$LOG_FILE"

    # PIPESTATUS[0] is tar's exit status; $? would only report tee's
    if [ "${PIPESTATUS[0]}" -eq 0 ]; then
        echo "Local backup created successfully: ${backup_destination_with_timestamp}.tar.gz" | tee -a "$LOG_FILE"

        # Sync to remote server
        rsync -avz --progress --stats "${backup_destination_with_timestamp}.tar.gz" "$remote_backup_server" 2>&1 | tee -a "$LOG_FILE"

        # Check rsync's status, not tee's
        if [ "${PIPESTATUS[0]}" -eq 0 ]; then
            echo "Remote sync completed successfully" | tee -a "$LOG_FILE"
        else
            echo "Warning: Remote sync failed, backup is only saved locally" | tee -a "$LOG_FILE"
        fi
    else
        echo "Error: Local backup creation failed, please check disk space and permissions" | tee -a "$LOG_FILE"
        exit 1
    fi
}

# Execute backup operation
perform_system_backup_with_compression_and_remote_sync "/var/www/html/application_with_very_long_directory_name"
```

SQL Query - Database Operations

```sql
-- Complex SQL query to test syntax highlighting and long line wrapping
SELECT
    u.user_id,
    u.username,
    u.email_address,
    u.full_name AS user_display_name,
    p.profile_picture_url,
    p.bio_description,
    COUNT(DISTINCT o.order_id) AS total_orders_count,
    SUM(oi.quantity * oi.unit_price) AS total_order_value,
    AVG(r.rating_score) AS average_customer_rating,
    MAX(o.order_date) AS last_order_date,
    STRING_AGG(DISTINCT c.category_name, ', ' ORDER BY c.category_name) AS purchased_categories
FROM
    users u
    LEFT JOIN user_profiles p ON u.user_id = p.user_id
    LEFT JOIN orders o ON u.user_id = o.customer_user_id
    LEFT JOIN order_items oi ON o.order_id = oi.order_id
    LEFT JOIN products prod ON oi.product_id = prod.product_id
    LEFT JOIN product_categories pc ON prod.product_id = pc.product_id
    LEFT JOIN categories c ON pc.category_id = c.category_id
    LEFT JOIN product_reviews r ON prod.product_id = r.product_id AND r.reviewer_user_id = u.user_id
WHERE
    u.account_status = 'active'
    AND u.registration_date >= '2023-01-01'
    AND (o.order_status IN ('completed', 'delivered') OR o.order_status IS NULL)
    AND u.email_address NOT LIKE '%+test%@%'
GROUP BY
    u.user_id, u.username, u.email_address, u.full_name, p.profile_picture_url, p.bio_description
HAVING
    COUNT(DISTINCT o.order_id) >= 1 OR COUNT(DISTINCT o.order_id) = 0
ORDER BY
    total_order_value DESC NULLS LAST,
    average_customer_rating DESC NULLS LAST,
    u.username ASC;
```

Test Instructions

- Hover Copy Button: Hovering the mouse over the code block should reveal the "Copy" button in the upper right corner

- Theme Switching: When switching between dark/light mode, the code highlight color should change accordingly

- Text Wrapping: Long lines of code should wrap automatically instead of creating a horizontal scroll bar

- Multi-language Support: Different programming languages should have corresponding syntax highlighting

After clicking the copy button, the button text should change to "Copied!", then back to "Copy".

Math Syntax Test

Author: Jane Doe

This is a test file to verify whether Hugo correctly handles symbols such as underscores and asterisks inside math environments.

Inline Mathematical Formula Test

Here are some inline mathematical formulas containing underscores and asterisks:

- Basic subscripts: $x_i$ and $y_{i,j}$

- Complex subscripts: $\sum_{i=1}^{n} x_i$ and $\prod_{k=0}^{m} a_k$

- Asterisk operations: $a^* \cdot b^*$ and $f^*(x)$

- Mixed symbols: $\alpha_{i,j}^* + \beta_k^{**}$
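The failure these formulas guard against is a Markdown pass treating the `_` and `*` inside `$...$` as emphasis markers. One common workaround, sketched here in Python, is to stash math spans behind placeholders before Markdown runs and restore them afterwards. This is not necessarily how Hugo handles math; `toy_emphasis` is a deliberately crude stand-in for a real Markdown renderer, and all names are illustrative.

```python
import re

# Display math ($$...$$) is listed first so it is not split into two inline spans.
MATH = re.compile(r"\$\$.+?\$\$|\$[^$\n]+?\$", re.DOTALL)
PLACEHOLDER = re.compile(r"\x00MATH(\d+)\x00")


def protect_math(text: str) -> tuple[str, list[str]]:
    """Replace each math span with a placeholder that contains no _ or *."""
    stash: list[str] = []

    def _stash(match: re.Match) -> str:
        stash.append(match.group(0))
        return f"\x00MATH{len(stash) - 1}\x00"

    return MATH.sub(_stash, text), stash


def restore_math(text: str, stash: list[str]) -> str:
    """Put the original math spans back in place of their placeholders."""
    return PLACEHOLDER.sub(lambda m: stash[int(m.group(1))], text)


def toy_emphasis(text: str) -> str:
    """Stand-in for a Markdown renderer: turns _word_ into <em>word</em>."""
    return re.sub(r"_(.+?)_", r"<em>\1</em>", text)


source = "Subscripts $x_i$ and $y_{i,j}$ next to _emphasis_"
print(toy_emphasis(source))  # math is mangled
protected, stash = protect_math(source)
print(restore_math(toy_emphasis(protected), stash))  # math survives
```

Without protection, the underscore in `$x_i$` pairs with the one in `$y_{i,j}$` and the formulas are split by an emphasis tag; with placeholders, only the prose underscores are converted.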

Block-level Mathematical Formula Test

Matrices and Vectors

$$ \mathbf{A} = \begin{pmatrix} a_{11} & a_{12} & \cdots & a_{1n} \\ a_{21} & a_{22} & \cdots & a_{2n} \\ \vdots & \vdots & \ddots & \vdots \\ a_{m1} & a_{m2} & \cdots & a_{mn} \end{pmatrix} $$

Summation and Integration

$$ \sum_{i=1}^{n} \sum_{j=1}^{m} a_{i,j} x_i y_j = \int_{-\infty}^{\infty} f^*(t) e^{-i\omega t} dt $$

Complex Mathematical Expressions

$$ \frac{\partial^2 u}{\partial t^2} = c^2 \nabla^2 u + f(x_1, x_2, x_3, t) $$

Machine Learning Formula

$$ \mathcal{L}(\theta) = -\frac{1}{N} \sum_{i=1}^{N} \sum_{c=1}^{C} y_{i,c} \log(\hat{y}_{i,c}) + \lambda \sum_{j=1}^{d} \theta_j^2 $$

Probability Distribution

$$ p(x \mid \mu, \sigma^2) = \frac{1}{\sqrt{2\pi\sigma^2}} \exp\left(-\frac{(x-\mu)^2}{2\sigma^2}\right) $$

Mixed Content Test

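In the text we have $E = mc^2$, and the loss function is:

$$ \mathcal{L} = \sum_{i=1}^{n} \ell(y_i, \hat{y}_i) + \frac{\lambda}{2} \|\theta\|_2^2 $$

where $\ell$ is the per-sample loss and $\lambda > 0$ is the regularization parameter.

This passage contains bold and italic text, but underscores inside the math formulas should not be affected.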
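The machine learning formula in this test is the average cross-entropy over $N$ samples and $C$ classes plus an L2 penalty $\lambda \sum_j \theta_j^2$. As a numeric check of how its terms combine, here is a minimal pure-Python evaluation; the function name and the toy inputs are illustrative only.

```python
import math


def regularized_cross_entropy(y_true, y_pred, theta, lam):
    """L(theta) = -(1/N) * sum_i sum_c y[i][c] * log(y_hat[i][c]) + lam * sum_j theta[j]**2"""
    n = len(y_true)
    data_term = -sum(
        y * math.log(p)
        for row_true, row_pred in zip(y_true, y_pred)
        for y, p in zip(row_true, row_pred)
        if y > 0  # terms with y = 0 contribute nothing, and skipping them avoids log(0)
    ) / n
    penalty = lam * sum(t * t for t in theta)
    return data_term + penalty


# Two samples, two classes, uniform predictions: the data term is log(2).
y_true = [[1, 0], [0, 1]]
y_pred = [[0.5, 0.5], [0.5, 0.5]]
print(regularized_cross_entropy(y_true, y_pred, theta=[1.0, 2.0], lam=0.1))
```

With $\theta = (1, 2)$ and $\lambda = 0.1$ the penalty adds $0.1 \cdot (1 + 4) = 0.5$ to the data term.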

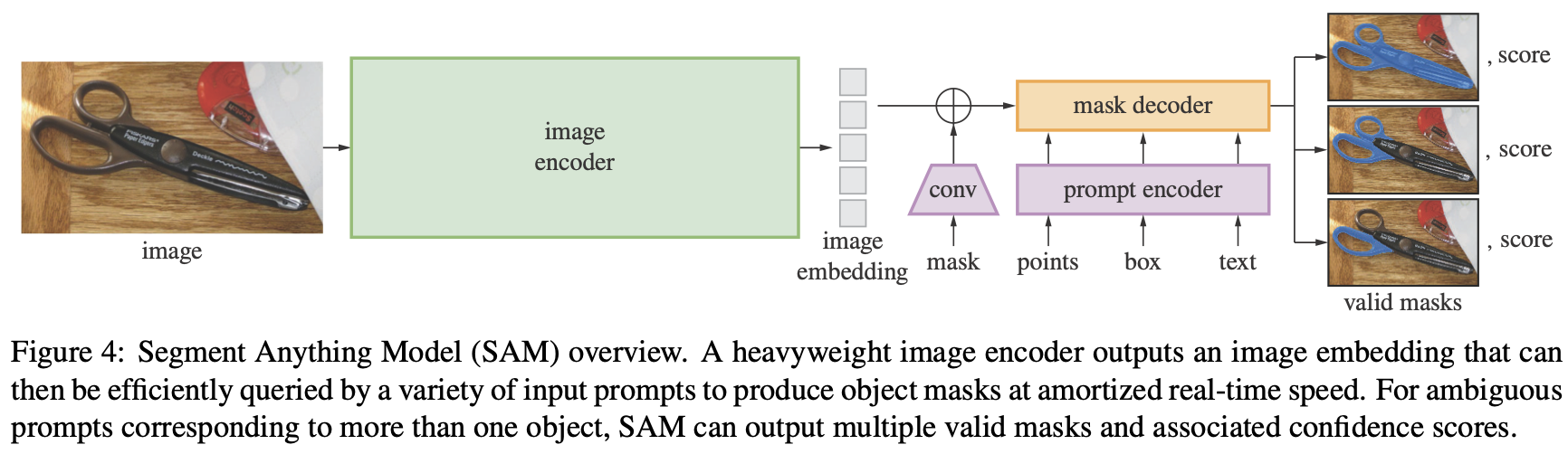

Segment Anything

We introduce the Segment Anything (SA) project: a new task, model, and dataset for image segmentation. Using our efficient model in a data collection loop, we built the largest segmentation dataset to date (by far), with over 1 billion masks on 11M licensed and privacy respecting images. The model is designed and trained to be promptable, so it can transfer zero-shot to new image distributions and tasks. We evaluate its capabilities on numerous tasks and find that its zero-shot performance is impressive, often competitive with or even superior to prior fully supervised results. We are releasing the Segment Anything Model (SAM) and corresponding dataset (SA-1B) of 1B masks and 11M images at segment-anything.com to foster research into foundation models for computer vision.

DINO: DETR with Improved DeNoising Anchor Boxes for End-to-End Object Detection

We present DINO (DETR with Improved deNoising anchOr boxes), a state-of-the-art end-to-end object detector. DINO improves over previous DETR-like models in performance and efficiency by using a contrastive way for denoising training, a mixed query selection method for anchor initialization, and a look forward twice scheme for box prediction. DINO achieves 49.4 AP in 12 epochs and 51.3 AP in 24 epochs on COCO with a ResNet-50 backbone and multi-scale features, yielding a significant improvement of +6.0 AP and +2.7 AP, respectively, compared to DN-DETR, the previous best DETR-like model. DINO scales well in both model size and data size. Without bells and whistles, after pre-training on the Objects365 dataset with a SwinL backbone, DINO obtains the best results on both COCO val2017 (63.2 AP) and test-dev (63.3 AP). Compared to other models on the leaderboard, DINO significantly reduces its model size and pre-training data size while achieving better results. Our code will be available at this https URL.

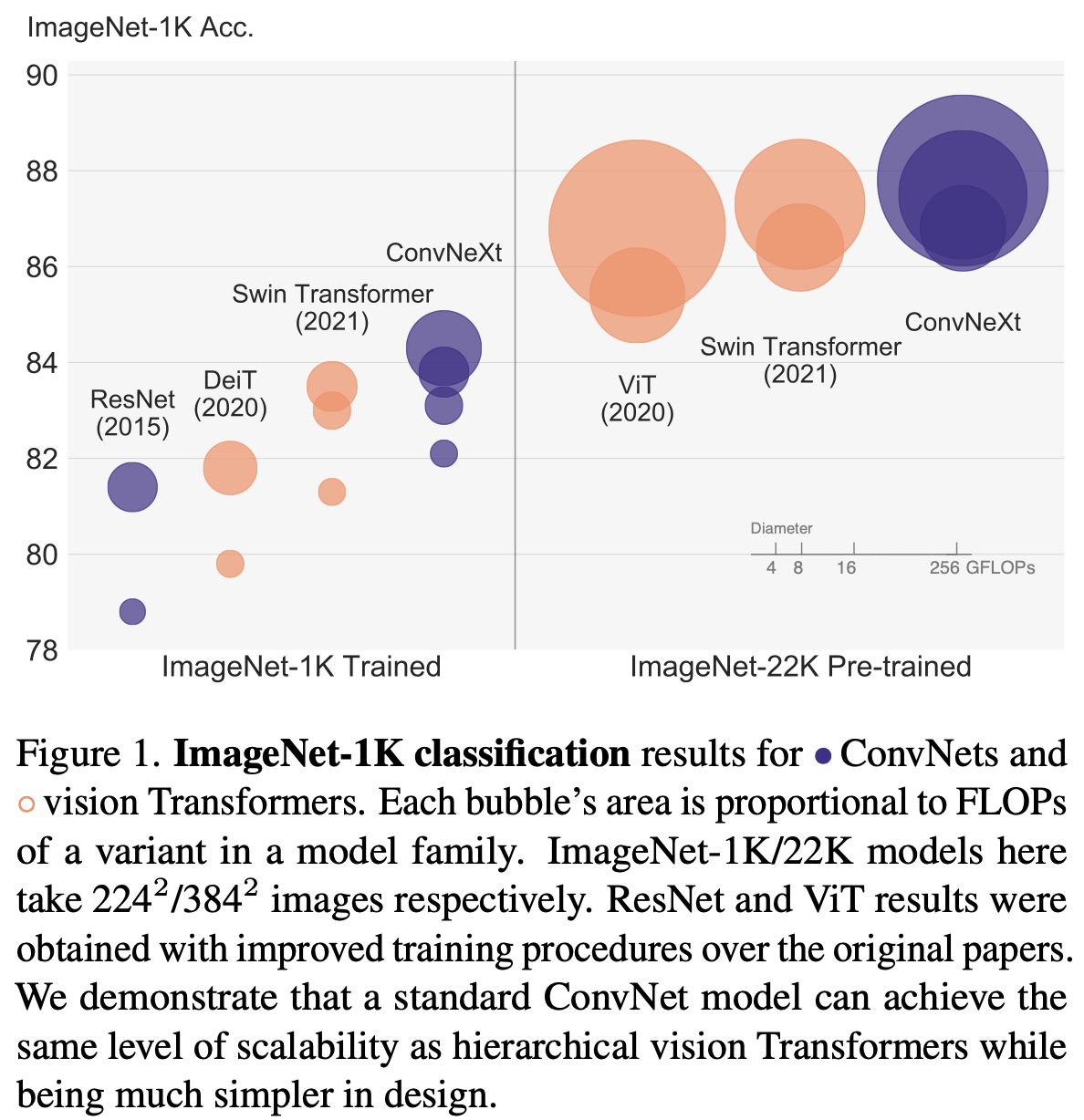

A ConvNet for the 2020s

Authors: Zhuang Liu, Hanzi Mao, Chao-Yuan Wu, Christoph Feichtenhofer, Ross Girshick, Kaiming He, Jane Doe

The "Roaring 20s" of visual recognition began with the introduction of Vision Transformers (ViTs), which quickly superseded ConvNets as the state-of-the-art image classification model. A vanilla ViT, on the other hand, faces difficulties when applied to general computer vision tasks such as object detection and semantic segmentation. It is the hierarchical Transformers (e.g., Swin Transformers) that reintroduced several ConvNet priors, making Transformers practically viable as a generic vision backbone and demonstrating remarkable performance on a wide variety of vision tasks. However, the effectiveness of such hybrid approaches is still largely credited to the intrinsic superiority of Transformers, rather than the inherent inductive biases of convolutions. In this work, we reexamine the design spaces and test the limits of what a pure ConvNet can achieve. We gradually "modernize" a standard ResNet toward the design of a vision Transformer, and discover several key components that contribute to the performance difference along the way. The outcome of this exploration is a family of pure ConvNet models dubbed ConvNeXt. Constructed entirely from standard ConvNet modules, ConvNeXts compete favorably with Transformers in terms of accuracy and scalability, achieving 87.8% ImageNet top-1 accuracy and outperforming Swin Transformers on COCO detection and ADE20K segmentation, while maintaining the simplicity and efficiency of standard ConvNets.

[80, center]

Learning Transferable Visual Models From Natural Language Supervision

State-of-the-art computer vision systems are trained to predict a fixed set of predetermined object categories. This restricted form of supervision limits their generality and usability since additional labeled data is needed to specify any other visual concept. Learning directly from raw text about images is a promising alternative which leverages a much broader source of supervision. We demonstrate that the simple pre-training task of predicting which caption goes with which image is an efficient and scalable way to learn SOTA image representations from scratch on a dataset of 400 million (image, text) pairs collected from the internet. After pre-training, natural language is used to reference learned visual concepts (or describe new ones) enabling zero-shot transfer of the model to downstream tasks. We study the performance of this approach by benchmarking on over 30 different existing computer vision datasets, spanning tasks such as OCR, action recognition in videos, geo-localization, and many types of fine-grained object classification. The model transfers non-trivially to most tasks and is often competitive with a fully supervised baseline without the need for any dataset specific training. For instance, we match the accuracy of the original ResNet-50 on ImageNet zero-shot without needing to use any of the 1.28 million training examples it was trained on. We release our code and pre-trained model weights at this https URL.

An Image is Worth 16x16 Words: Transformers for Image Recognition at Scale

Authors: Alexey Dosovitskiy, Lucas Beyer, Alexander Kolesnikov, Dirk Weissenborn, Xiaohua Zhai, Thomas Unterthiner, Mostafa Dehghani, Matthias Minderer, Georg Heigold, Sylvain Gelly, Jakob Uszkoreit, Neil Houlsby, Jane Doe

While the Transformer architecture has become the de-facto standard for natural language processing tasks, its applications to computer vision remain limited. In vision, attention is either applied in conjunction with convolutional networks, or used to replace certain components of convolutional networks while keeping their overall structure in place. We show that this reliance on CNNs is not necessary and a pure transformer applied directly to sequences of image patches can perform very well on image classification tasks. When pre-trained on large amounts of data and transferred to multiple mid-sized or small image recognition benchmarks (ImageNet, CIFAR-100, VTAB, etc.), Vision Transformer (ViT) attains excellent results compared to state-of-the-art convolutional networks while requiring substantially fewer computational resources to train.

Resources

Paper | Code | Project Page | Google Research

Deep Residual Learning for Image Recognition

Deeper neural networks are more difficult to train. We present a residual learning framework to ease the training of networks that are substantially deeper than those used previously. We explicitly reformulate the layers as learning residual functions with reference to the layer inputs, instead of learning unreferenced functions. We provide comprehensive empirical evidence showing that these residual networks are easier to optimize, and can gain accuracy from considerably increased depth. On the ImageNet dataset we evaluate residual nets with a depth of up to 152 layers, 8x deeper than VGG nets but still having lower complexity. An ensemble of these residual nets achieves 3.57% error on the ImageNet test set. This result won 1st place on the ILSVRC 2015 classification task. We also present analysis on CIFAR-10 with 100 and 1000 layers.

The depth of representations is of central importance for many visual recognition tasks. Solely due to our extremely deep representations, we obtain a 28% relative improvement on the COCO object detection dataset. Deep residual nets are foundations of our submissions to ILSVRC & COCO 2015 competitions, where we also won 1st place on the tasks of ImageNet detection, ImageNet localization, COCO detection, and COCO segmentation.